The Great Convergence

Differentiating in a World of Sameness.

First interactions with generative AI often feel like magic. With a few keystrokes, content – often nuanced and complex – emerges from simple prompts. And in many ways, it is magic. It can summarize documents, generate code, and answer detailed questions. Cue Clarke's Third Law: "any sufficiently advanced technology is indistinguishable from magic". That said, the more you use generative AI tools, the harder it is to shake the sense that the technology is quickening a race toward... something less than extraordinary. Average, maybe. Just as big city downtowns and suburban shopping centers replicate the same stores and aesthetic, and just as interior design tastes cyclically align toward shared shades or fabrics, so too will the cheap availability of AI-generated content accelerate a convergence toward a milquetoast middle. That's not to say it won't still be useful and effective; rather, it will be used in places where individual inputs are either too expensive to produce or not required.

Given that we've recently celebrated the Fourth of July, E Pluribus Unum may just as well serve as the motto of the AI age: "From many, one", as our unique voices and styles are melded into a single voice by LLMs and AI technology. We are quickly learning to recognize the tenor and cadence of AI-generated outputs; there is a distinctive tone, with words that try just a hint too hard to sound smart and important, or are just a smidge off-kilter when mimicking casual conversation. It's the uncanny valley of sameness - all filling and no taste.

This is the Great Convergence.

Part I: Convergence to What?

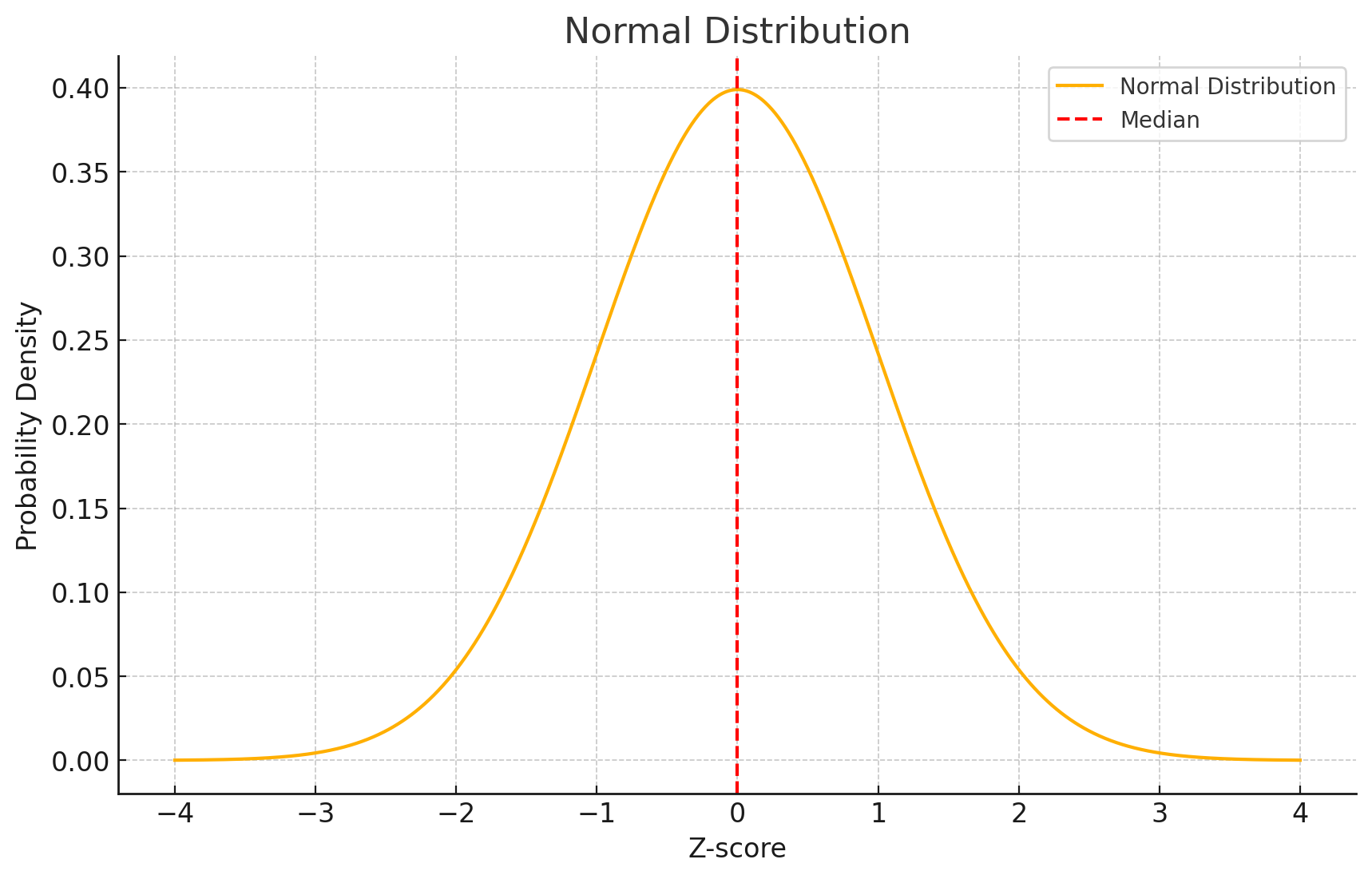

It's worth understanding just why generative AI feels like magic. Let's assume, for the sake of argument, that the writing abilities of the general population follow a roughly normal distribution, with brilliant writers on the right and the functionally illiterate on the left. The middle point is the median, with 50% of the population on either side. Now, let's assume that an LLM trained on written data produced by this population could replicate the average (mean) of this writing. For a normal distribution, the mean and median are the same point, so approximately half the population would think "wow, this produces better outputs than me!", while the other half might think "I could do that".

But wait - the data that an LLM is trained on is key to this story. There are several more assumptions we have to make (note that these are illustrative assumptions, and aren't intended to represent a true technical overview of LLMs):

- That the content available for training skews toward the "better" end of writing abilities. That is, most bad writers don't create content that is preserved on the Internet, and the average Amazon reviewer or Reddit commenter is still a better writer than the portion of the population that doesn't write at all.

- That the content chosen for training further selects for specific categories and attributes - verified and credentialed sources, public domain literature, material that has been vetted by gatekeepers (newspaper articles, Wikipedia, etc.).

- That the models are optimized to prioritize good content, sorting for civility, professionalism, clarity, etc. OpenAI, for instance, employs Reinforcement Learning from Human Feedback (RLHF) to further “teach” the model to respond with high-quality replies. That is, human reviewers rank and label sample outputs for a given prompt in order to “reinforce” which outputs the model should prefer.

- That a significant amount of the best content (novels, literary journals, etc.) isn't available for training, due to either copyright or paywall restrictions. Even where it is available, this content makes up a comparatively small proportion of the overall training corpus.

Once we account for these assumptions, we start to see that an LLM trained on this subset of data isn't just returning a weighted average of the population's writing skills - it is going to return content that is significantly better than what much of the population could produce. And it's this part that feels oddly unsettling: AI is converging, in terms of pure language, on a point that feels higher-end than what many people are capable of. Even for those who are capable, the quality still proves surprising. This isn't about hallucinations or fact-checking, but rather about the machine's mechanical ability to string words and sentences together in a coherent, sensible fashion, with a modicum of style. That convergence point is tempered somewhat by the fact that there is a large amount of "good to great" writing in the training data sets, but comparatively little (by volume) "excellent" writing. Even so, it sets a high bar.

So we're converging toward something that can, in an automated and expedient fashion, produce content that is better than something like, say, 70-80% of the population (in terms of clarity, grammar, and syntax – not creativity or substance). It can do it faster than we can, and on demand. And that's the part that feels like magic.

The tradeoff, of course, is that this content increasingly sounds like "AI content", through its choice of words, tone, and sentence structure. Humans are remarkable pattern-recognition machines, and we've quickly started to recognize the tell-tale signs of AI content.

Part II: Corollaries and Canaries

For some domains, "good enough" writing is fine. Prompts for general research and requests for recipes don't have to return fine art. I don't expect stylized replies to technical queries about databases. But what about when I'm creating content that I want to represent me? My family? My tribe? How do we define "better"? I fully understand I'm wading into a complex topic with too little time to do it justice. But still...

In addition to a convergence of content, we’re also seeing convergence of another form – that of capabilities. Once a machine that can write better than 70% of the population is available, it is inevitable that it will be put to work. If various tasks once required the inputs of many specialized (and expensive) people, the machine opens the door to handling them through a single, automated, converged capability: content generation.

How do we decide when this convergence is helpful, and when it is harmful? And further, how do we differentiate as individuals and collectives during this Great Convergence? For historical precedent, we can look to the Swiss watchmaking industry, whose story holds remarkable parallels to our current age of AI innovation.

The "quartz crisis" (alternately, the "quartz revolution" outside of Switzerland) was brought about by the introduction of cheap, accurate quartz watch movements. It fundamentally reshaped the watchmaking industry over the course of several decades. While the crisis was a minor disruption in the grand scheme of things, the change it wrought reverberated for years across the world's historical watchmaking centers, causing chaos before the industry finally settled into a new equilibrium.

For generations, Swiss watchmaking was synonymous with quality. Skilled craftsmen had perfected the art, and Swiss watches sold at a premium given their reputation for accuracy and durability. That equation began to shift around 1970, after Seiko released the Astron, the first commercial quartz wristwatch, in late 1969. Japanese manufacturers such as Seiko and Citizen invested heavily in quartz technology, which proved immensely popular and profitable.

Instead of the painstakingly handcrafted mechanical movements that underpinned traditional Swiss watchmaking, quartz movements rely on a tiny crystal that oscillates at a precise frequency (typically 32,768 times per second) when an electrical current is applied. A circuit divides this oscillation down to a more manageable 1 hertz, and a small stepper motor converts the resulting once-per-second electrical pulses into the mechanical movement that drives the hands of the watch. This method provides a highly accurate, low-maintenance, and cost-effective watch mechanism. Swiss manufacturers, during this time, remained largely focused on their traditional mechanical movements, resisting the technological tide.

Ultimately, this proved calamitous for the Swiss industry, wreaking havoc across a large swath of long-standing firms. Within two decades, more than 70,000 people lost their jobs (with 1,000 of 1,600 Swiss firms going out of business), and Swiss dominance had given way to Japan and the United States, two more tech-forward economies. Watches - much like information today - were no longer a luxury. Anyone who wanted one could now purchase an affordable, good-looking watch.

So we're all wearing quartz watches today, right?

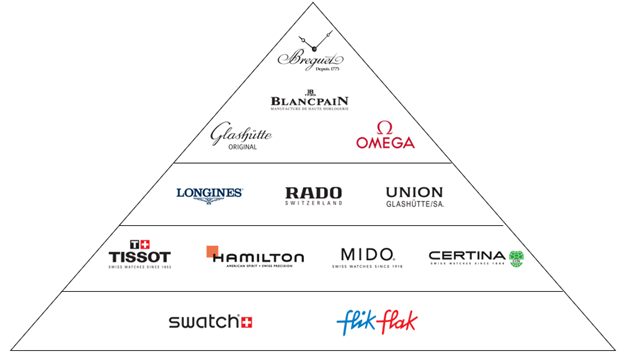

Maybe not. Switzerland came back. The Swatch Group consolidated many legacy brands under a single ownership group and propped up the Swiss market. Under Nicolas Hayek's leadership, the Swatch Group invested heavily in automation and strengthened the firm’s management. Hayek introduced a robust, shared IT infrastructure and financial reporting processes, and favored “vertical integration as essential for maintaining autonomy”. An explicit brand differentiation strategy was developed, represented by the Hayek pyramid. Each brand held tightly to its individual identity but relied on centralized manufacturing and infrastructure to support its operations. The Swatch brand was a global success, and from this strong foundation, the Swiss watchmaking industry reemerged.

One result was that high-end watch brands switched from competing on accuracy to competing on brand identity. As capabilities converged, they could no longer reasonably claim that their devices were unique, or that their accuracy was the product of complex engineering. As such, they couldn't charge a premium for those features. Instead, a focus on the individual consumer allowed each brand to craft a unique message. The quality of the product remained the same, but boutique watch companies were no longer selling watches; they were selling timepieces. They were selling status, luxury, and style.

And so they started competing on different dimensions - exclusivity and prestige, sportsmanship and heritage. Appeals to generational ownership. If you just needed to know the time, you weren't in the market for a Patek Philippe or Jaeger-LeCoultre. Importantly, the Swiss brands built their moat around an advantage that couldn't be replicated - how their product made you feel.

Because of this pivot, the Apple Watch and other smartwatches were not the extinction event they were predicted to be. They competed along the same axes of brand and consumer identity that had been established decades earlier. They also helped open up a new market, with fitness trackers and smart devices claiming a share of the overall market, but never really competing head-to-head with high-end analog watches. They met different, complementary needs for functionality and adornment.

So then, where's the parallel, you ask? Let's start with massive disruption in an established market brought about by rapid technological change and a sudden convergence of capabilities and costs. This then creates sweeping job losses, followed by a fundamental restructuring of the major players in the industry. It takes 15 years for the changes to fully echo through, and for players to organize enough to stabilize the "legacy" market. Once done, the legacy firms continue to thrive, but with a fundamentally altered market message and vision.

There are lessons on both ends. First, disruptors armed with superior technology can enter a well-established market and upend it in a matter of years. Second, established players can retool their operating models and messaging to keep offering a compelling product, even as demand for their core offerings erodes through commodification (the convergence of capabilities). We are on the cusp of this playing out today, as AI begins to shake the low-end parts of the knowledge and creative industries with the ready availability of "cheap expertise" and "good enough knowledge" wielded by upstarts. Firms must either defend or reformulate their value proposition.

Part III: The Stories We Tell

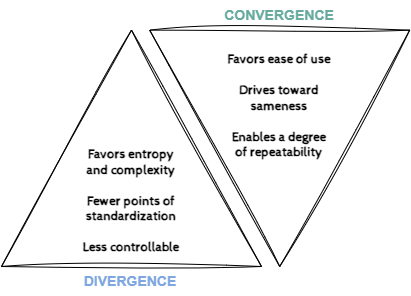

I anticipate that as the low end of the content and automation market blurs, we'll see firms employ two defense mechanisms. First, like the watch industry, they'll need to sell on an advantage that can't easily be replicated – i.e., they need to find new axes to compete on. It’s up to each firm to figure out what that advantage is, whether it's product, culture, brand, etc. Second, they’ll need to create a divergent knowledge source that remains separate and distinct from the converged knowledge provided by AI. Let’s call this “artisan knowledge”.

Artisan knowledge is pulled from non-public sources. It is deep, institutional knowledge. Artisan knowledge is purposefully incomplete - it requires a hidden hand to guide the final step towards implementation. Like public/private key encryption, it exposes one piece of the puzzle openly, but requires a hidden private key to actually unlock value. Artisan knowledge lives in conversations, handshakes, and deep experience. It can be codified, but not entirely. It injects human personalization and understanding into situations.

The trick is to combine the human advantage of artisan (divergent) knowledge with the productivity advantage of AI (convergent) knowledge to create competitive differentiation. Marrying the two provides powerful benefits and maintains the centrality of people, organizational expertise, and tacit knowledge in how work gets done.

Because actions are taken by individuals, not collectives, I'll offer one final question and one line of advice. First, the question. If you're considering generative AI capabilities, ask yourself: do I need to complete a task, or do I need this content to stand the test of time? If the latter, opt for hand-crafted. If not, use AI to save yourself enough time to work on something that does need to stand the test of time. You have a right to access the knowledge of the world, and an obligation to contribute to it. Do so in a way that matters.

Good writing encompasses three critical elements: style, substance, and story. That is, there should be a Big Idea that is carried forward by a Compelling Narrative and delivered with a sweeping Command of the Language. Of course, that alone is not enough for writing to be great, for it to become timeless, because what is man if not a vessel for a soul? Great writing requires soul; it requires voice. While AI can touch on elements of the first three, it fundamentally lacks a distinctive fourth.

And so, in the spirit of humanistic AI, when you do deploy technology, make sure not to lose your own voice. Instead of the many melding to one, remember, as Whitman said, "I am large, I contain multitudes". Use your many experiences, your many thoughts, to create a unique voice. It doesn’t have to be polished. It doesn’t have to be precise. It just has to be you. And remember, you have to have a voice before you can automate it. Just as you have to have an idea before you implement it, you need to develop and refine your voice before you can be comfortable enough to extend it with AI. One of my greatest fears in this age of AI is not what happens in the next 3-5 years - it's what happens in the next 20-30 years, when whole generations have leaned so heavily on the technology that they've failed to develop a truly unique voice. Even worse, what happens when people begin to fear the unique voice because they are so unused to it? At that point, the convergence will be complete.

So, let's all just take a deep breath. Go ahead, breathe with me. This will move more quickly than we want it to, but more slowly than we expect. There is time yet for a swim in the lake and a walk through the field. Fire up the grill, throw on a dog, and take a moment to relax with friends and family. Practice being. At least for a day.