Sugar High

Once the AI sugar high wears off, will we be the better for it, or adrift?

We are approaching the age of the AI "sugar high". As firms invest in AI technology and AI-enabled workforces, we are seeing near-term productivity benefits that are, dollar for dollar, tantalizingly attractive. These gains are separate from the hype cycle – they are real improvements in productivity that can help improve financial positions and build competitive advantage. But at what long-term cost? Once the sugar high wears off, will we be the better for it, or adrift in a foreign landscape without the anchoring forces that brought us here? It is necessary to plan for what comes next, now.

Today, there exists a gulf between current value and future promise. There is a trillion-dollar investment being made that is so large it may never be fully recouped. Big Tech is spending heavily on the plumbing to make AI work – the GPUs, data centers, and power plants required to fuel this revolution. Much like how the early Internet era left us with miles of fiber cable and not nearly enough web traffic to fill the bandwidth, there will be a heavy capital investment required by the early players. What’s different this time around is that those players are well positioned to take advantage of the systems they are building. But once the infrastructure is in place, and the public becomes comfortable (enough) with the technology, smaller, more disruptive organizations will step into the fray with new products and new ideas. Like water, AI infrastructure will seek the path of least resistance to recoup the formidable amount of money poured into its construction, so it’s best for today’s firms to begin preparing with purpose.

We are in an era of cheap answers. While compute is expensive, Big Tech is subsidizing its use, and there are enough low-hanging problems that the payoff for organizations adopting AI can be significant. However, that era will be short-lived and soon replaced by one where the costs of new, competitively differentiated advances are significant. When this happens, the organizations that have planned ahead will benefit most. This planning requires more than just layering AI technology on top of existing processes; it requires a wholesale reimagining of the future of work – of work culture, expectations, and norms – if we are to collectively benefit from the technology.

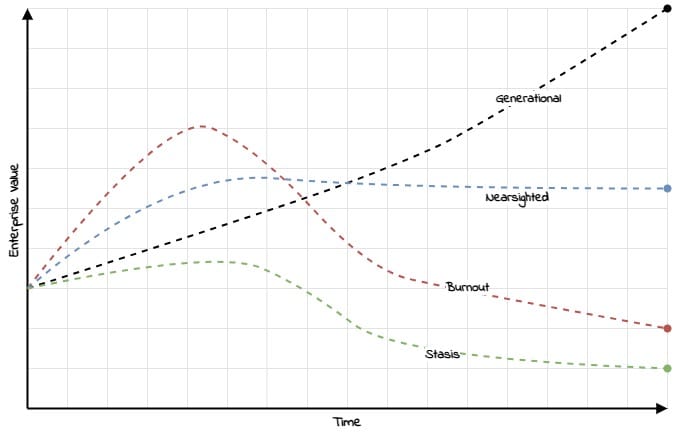

While the real promise of AI-enabled orgs is years away, there are real and tangible benefits to using the technology now. There is opportunity to do things faster, better, and differently. AI-enabled capabilities such as transcription, summarization, and assisted editing are rolling out now. This, then, is the bridge to the future that we must all walk. It is rickety at the moment but, truss by truss, becoming more stable with each passing day. These benefits, though, are ultimately just empty calories unless a long-term strategy is put in place to capitalize on them. As organizations begin to realize some very real improvements from their deployments of AI tools, we can expect four strategic use patterns to emerge:

- Reinvest the time (the generational track). Long-term growth.

- Cut roles (the nearsighted track). Short-term growth.

- Add more work (the burnout track). Short-term decline.

- Do nothing (the stasis track). Long-term decline.

The Generational Track

These organizations will use early productivity gains as a jumping-off point into sustainable long-term value. They will do so by proactively investing both in technology and people, creating a virtuous cycle of value. Not all bets will pay off, but there will be enough resolve to treat investment as a long-term strategy. AI is part of a holistic approach to "work futurism".

The Nearsighted Track

Some organizations will choose to use productivity gains to eliminate roles. This isn't inherently bad if it helps the organization compete and grow, but this strategy limits the organization's long-term impact. For organizations that are more tech-centric, this may be the correct approach, so long as the remaining talent is allowed to invest time in more strategic pursuits.

The Burnout Track

The team has more time available? Let's fill it with more work! While this may prove beneficial to the short-term bottom line, it risks further alienating a workforce already threatened by the encroachment of AI. Short-term productivity gains, without some degree of reinvestment in people and strategy, will produce only short-term profits.

The Stasis Track

Or you could always just do nothing. In the short run, your team may relish the extra time, but unless that time is positively directed, you'll fall behind. While not all investments in AI are flashy, they are being made. The results will surface over time, and once they do, those who haven’t invested will find themselves playing from behind.

These tracks are not mutually exclusive. Savvy organizations may deploy a mix of strategies, though I expect that those that can weight toward the generational track will be the most successful long term. These are all, of course, near-term strategic choices. And what waits ahead?

Planning Ahead

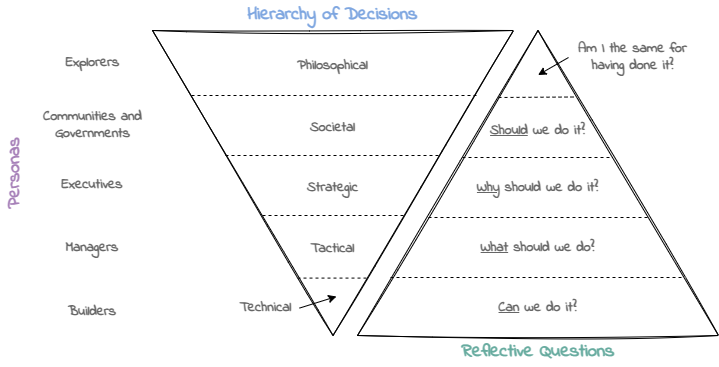

Much like the nuclear era, the great challenges of the AI era will be not the technical ones, but the philosophical ones. That is not to diminish the technical acumen required to build these tools, but rather, to say that the defining question will not be one of "can we?", but rather, "should we?"

I'm reminded of William Gibson's observation that "the future is already here — it's just not very evenly distributed". While the world carries on its existing trajectories, most are unaware that there's been a rupture in the fabric of human/technology interactions. That the world is quietly sleepwalking toward something very big. And once we awaken, we'll rub the sleep out from our eyes and marvel at how quickly everything changed.

This macro-observation holds true for individual organizations too. When thinking about the impacts of AI, it's easy to index on the first-order impacts: productivity gains and job losses are how it's typically framed. Many commentators then jump to one of the more extreme outcomes ("massive societal upheaval", "world-ending war", etc.). We anchor to narratives of "AI is good" or "AI is bad". It is neither. And both. AI in the right hands will be transformative. AI in the wrong hands will be repressive. The trick is to guide those hands and the minds controlling them, and to create the right incentives and regulations. To do that, we need to educate ourselves, and to do that, we need to think generationally and strategically about potential outcomes.

So let's look at the impacts of AI.

First-order impacts deal with people's actions and focus on the individual. They include job losses, reskilling, elimination of rote work, and the creation of new opportunities through expanded productivity. The flip side is the devaluation of certain existing skills. AI will change the careers we pursue and those we guide our youth toward. Roles that are highly valued today will lose some of their luster. First-order AI impacts will affect organizations in different ways. We will see productivity gains without (many) lost roles in higher-end knowledge professions (law, medicine, consulting). We will see significantly larger-scale role losses in lower-end knowledge-based clerical roles (data entry, accounting, etc.).

Second-order impacts deal with people's collective thoughts – in particular, how society operates. They focus on how we assert control – who controls the technology, who influences the models, how we allow AI to be deployed. The impact and stakes are larger – surveillance states and surveillance capitalism. Loss of privacy. Impact to ego and sense of identity.

Third-order impacts deal with how ideas propagate across generations. The devaluation of core human attributes such as learning, writing, and languages. How we transmit knowledge to our offspring. How we learn. The degree of our co-dependence on technology and our overall sense of self.

When charting a path forward, we must all keep in mind not only what this means now, but how the decisions we make today impact those who come after us. The technology is nascent enough to be malleable, so we must work to shape how it grows. The sugar high is just the start. We're at the beginning of realizing first-order impacts, with the second- and third-order impacts only now coming into fuzzy view. We need to focus on sustainability while minimizing human harm. A degree of disruption is inevitable, and to some extent, a sign of health, but we must not let it get out of hand.

So then, let's encourage each other to use this sugar high to bootstrap ourselves into a sustainable cadence. A healthy meal plan, if you will. Let’s build a foundation that we can grow from, grow with, and grow into.