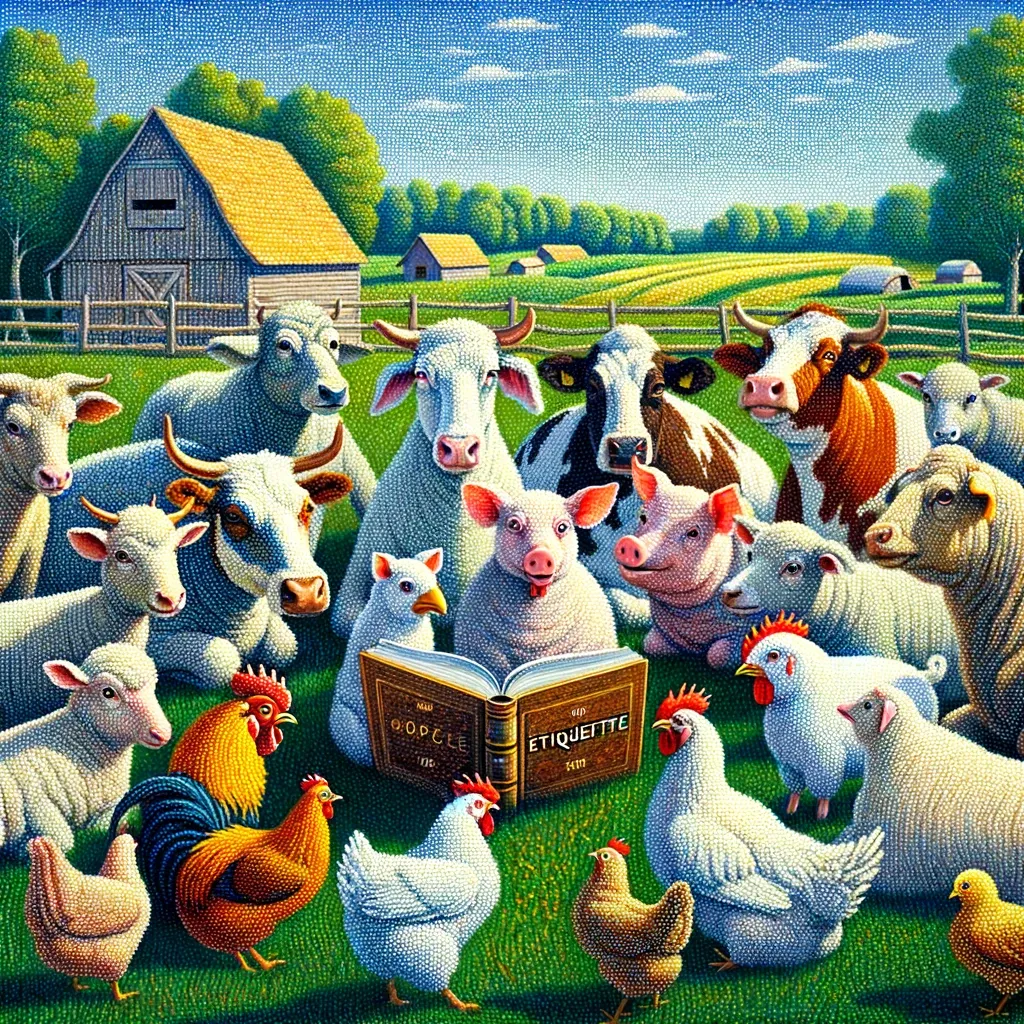

Mind Your Manners

On why we should speak politely to technology, what we gain by doing so, and what we stand to lose if we don't.

Think back to your last interaction with ChatGPT, Google Bard, or your bank's automated chatbot. Did you thank it for its help? Prefix your requests with "please"? Speak in complete sentences, even? While that may seem an odd thing to dwell on, we are quickly reaching a point in our AI interactions that prompts a question of etiquette. That is, when a technology appears human, should we treat it as such? What do we gain by doing so - or rather, what do we lose if we fail to do so?

I believe there are several reasons why we should "mind our manners" when interacting with AI:

- The ethical case: social norms, once deviated from, are difficult to reestablish

- The moral case: our outer actions are a reflection of our inner selves

- The humanistic case: manners afford us an opportunity to assert our humanity

I'm by no means suggesting that chatbots are sentient entities, or even that they deserve our good social graces. Rather, this is a human-centered view of why it is still valuable to retain social norms in these interactions.

When Google's search box first appeared, we quickly learned how to optimize our requests. We skipped the formality of punctuation, dropped stop words and other filler, and adapted to the needs of the algorithm. But while we marveled at the speed and accuracy of the results, we never truly engaged with it as we would in a conversation, and felt no obligation to extend niceties to these interactions. Our interactions with chatbots are changing this, and rapidly.

Ethical Case: Consistency is Key

We've spent years developing the muscle memory behind our verbal courtesies. Organizations (good organizations) understand and nurture the complex social interactions that ultimately create strong cultures. Over the years, this has evolved into what we now call "professional" behavior. We say "thank you". We don't curse. We treat others with respect. Muscle memory matters, and even the interactions we have in private leave an imprint that shapes our everyday public ones.

We also know that the world has a general tendency toward entropy. Norms, once broken, are hard to reestablish, and can quickly degrade. If we allow ourselves to be curt (or, even worse, abusive) toward an AI, that behavior can quickly spill over into our other "real life" interactions. This risks coarsening the general dialogue, with detrimental effects on professional culture.

If, then, one of the great challenges for AI in the years ahead is to preserve and curate accurate and reliable data sources for training, and if our own conversations with these systems may one day become part of that training data, perhaps we also have an ethical obligation not to pollute the well. Garbage in, garbage out.

"But wait!" you say. "That's just silly! I can context-switch, and it's never really an issue." Fine. Maybe it isn't for you, but it will be for some.

For you, then, there is the Golden Rule.

Moral Case: Do Good to Be Good

Perhaps all this is just too many words spilled over what could be simplified to one of our earliest lessons in social interactions: the Golden Rule. Treat others as you would like to be treated.

In reality, no one will ever know if you speak poorly to your chatbots. That doesn't mean you should. Ethics aside, one may claim a moral obligation to act in accordance with normal social niceties. To be true to ourselves, we mustn't deviate from our values, even when we could do so unobserved.

There is a case here for practiced empathy and compassion. That the target of this empathy isn't human need not diminish the benefit of the practice, any more than it would if the target were a dog or a cat.

Humanistic Case: Flex Your Humanity

And if neither of those arguments has swayed you, then consider a simple plea to flex your humanity.

Generative AI represents a marked shift in how we interact with technology. We have a choice, then, in the how. And there may be no better way to assert our humanness than to interact in a way that (1) extends entirely unneeded courtesies, and (2) does so even at some cost to productivity.

I'll posit a corollary: "the culture of an organization can be judged by its AI chat prompt history." Were this data actually available, I'd wager it would be not only a trove of insight into how an organization solves problems, but also a leading indicator of its cultural health.

Conclusion

Be nice.