Intelligent Awareness

A Cognitive Framework for Humanistic AI

I've been thinking recently about John Henry and the steam engine. You might know the tale (or the song, for the Johnny Cash fans out there). The best steel-driving man on the railroad, John Henry was renowned for his strength and endurance. One day, as the rail line approached an impassable mountain, a new steam-powered drill arrived on the job site. John Henry didn't like the idea of a machine taking away his livelihood, so he raced the engine to see who could drill farther in a single day. Once the dust settled, everyone saw that John Henry was victorious, having out-drilled the steam engine by several feet. We all know how the story ends, though, because it's the story we're living today: John Henry died from exhaustion, and the steam engine kept on drilling the next day. Over the years, technology slowly, then abruptly, surpassed raw human strength.

Twenty-first-century conversations about AI have an eerily similar feel, and I've been having plenty of them lately with friends, colleagues, and acquaintances. Mostly, they're about the impact of AI: what we gain and what we lose as it encroaches on more and more aspects of our lives. I've been grappling quite a bit with how to engage with AI, to what extent that engagement is inevitable, and, if it is, to what degree we should participate in a process that may ultimately erode the role that knowledge plays in our lives.

Right now, the best way to describe AI is as an early-stage cognitive steam engine. It's powerful, unwieldy, hugely inefficient, and on a trajectory to reshape our everyday lives tremendously. At present, we're largely able to outthink it, and we can point to its more obvious hallucinations as evidence that it won't compete with human intelligence. One-on-one, smart humans can still outperform smart AI in general knowledge (in narrow, specialized domains, on the other hand, it is much easier to train expert-level AI). That said, outthinking it costs us time, and time is ultimately what John Henry ran out of.

So then, how should we adapt? We don't drill tunnels by hand anymore; we've learned to drive the machines. AI is an epoch-defining technology, not unlike the Industrial Revolution. As this revolution unfolds, we will need new approaches to knowledge work, ones in which we are more than just input engines and conduits to AI. We need approaches that allow us to think and to learn from what we have gathered through our tools. We don't need to position ourselves directly against AI to do so. As with the steam engine, at some point competing against a machine in domains where it is exponentially better is not a winning proposition. Still, this can be a symbiotic relationship, one in which the tool is augmentative rather than reductive. We need to develop new frameworks to guide this relationship.

I'll pose the idea of Intelligent Awareness (IA) as a step toward this framework. If AI is non-human, IA is uniquely human. It is a conceptual model for working with GenAI tools that centers the user and their individual thought process, in alignment with the principles of what I'll call Humanistic AI. Humanistic AI nods toward the inevitability of some aspects of AI adoption, providing guidance for humans to harness it effectively while preserving those attributes that make us, well, human. Humanistic AI is an attempt to govern why and when to interact with AI, and how to do so in a way that allows our souls to breathe freely, and not suffocate under the weight of the digital world around us.

If we are intelligently aware, what are we aware of? Simply put: ourselves, our tools, and the questions we seek to answer. Intelligent Awareness starts with the premise that hard thinking is valuable, that AI tools can add to that value in a meaningful way, and that there is inherent value in grappling with an idea over an extended period of time. If you don't buy into that premise, and simply see AI as a way to shortcut the thinking process, then IA doesn't apply.

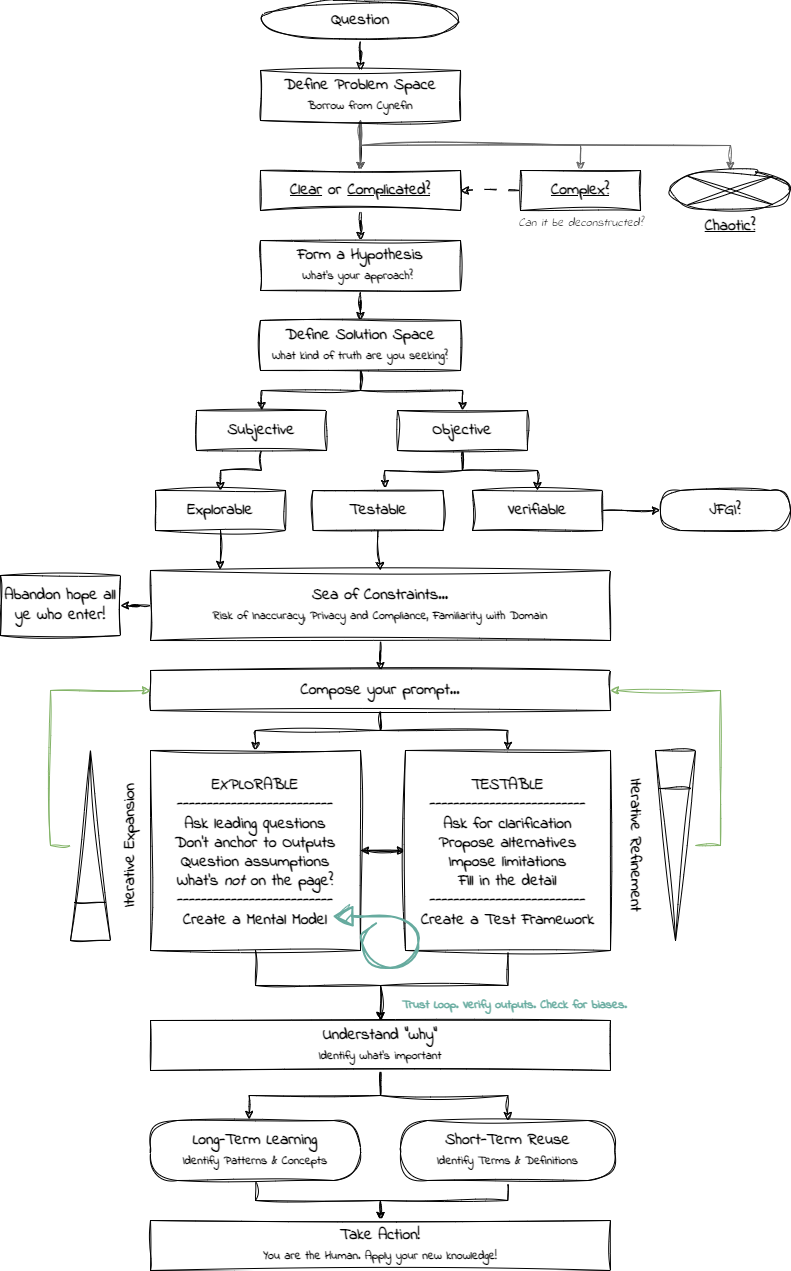

The Intelligent Awareness Framework

The IA Framework is intended to help us break down our use of generative AI so that we engage with it meaningfully, responsibly, and sustainably. It should be viewed as a more contemplative counterpart to prompt engineering, providing a mechanism for organizing our thoughts and running them through the gauntlet that AI tools such as ChatGPT, Copilot, and Claude provide. These tools can be immensely helpful in synthesizing information. They can also be compellingly misleading, both in what they say and, more tellingly, in what they don't say. To protect ourselves and reap as much as possible from these tools, we need governance frameworks for our thought processes.

Applied correctly, and with intention, IA can help us speed up our natural thinking and learning cycles. So, without further ado, let’s take a look at the model, and walk through some of its elements.

It all starts with a question. Choose the right question. Understand why you're asking it and what you hope to accomplish. If you don't pick the right question (i.e., the right problem to work on), none of the rest of the process matters.

Next, define the problem space. I've borrowed from Dave Snowden’s Cynefin framework here, if only because I like its segmentation of problems into Clear, Complicated, Complex, and Chaotic. Right now, the problems best solved by AI are those that are Clear or Complicated.

Complex problems are bound by multiple dependencies and are less well suited to AI. Current AI implementations can perform limited complex reasoning, but as the exception rather than the rule. If, however, a Complex problem can be deconstructed into parts, you can feed the constituent parts into the model and use your own complex reasoning skills to stitch the solution together.

Chaotic problems, because they may need novel solutions, require fundamentally different approaches and are best solved outside the bounds of a defined framework (i.e., if a solution hasn't yet been developed, it's likely not well represented in a foundation model, which has a fixed knowledge base). You'll need a different model, preferably one more stochastic in nature. While AI is non-deterministic, it's non-deterministic within narrow boundaries, and if you want to model a chaotic problem, existing generative AI systems will limit your effectiveness.

Form a hypothesis. By forming an independent hypothesis, you protect against anchoring to the AI's initial outputs. This hypothesis also helps inform your choice of solution space. If you skip this step, you're more likely to accept the solution provided, even if it is generic, incomplete, or just plain wrong.

What type of solution best fits the problem? To get a better handle on this, you must define your solution space. Are you seeking an objective or a subjective solution? Objective solutions must be either Verifiable (proved with evidence) or Testable (proved through execution, such as running code). Subjective solutions should be Explorable. If your problem is Clear and your solution is Verifiable, you're best off just Googling it, as that will provide a clearer, more direct pathway to an evidence-backed answer.
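To make the routing in these steps concrete, here is a minimal, hypothetical sketch in Python of how the problem space and solution space might combine into a suggested approach. The names (`Problem`, `Solution`, `suggest_approach`) and the returned labels are illustrative inventions, not part of any tool. The pairing of Verifiable and Explorable solutions with refinement or expansion is also an assumption, extrapolated from the iterative refinement and expansion steps.

```python
from enum import Enum


class Problem(Enum):
    """Cynefin problem domains used in the problem-space step."""
    CLEAR = "clear"
    COMPLICATED = "complicated"
    COMPLEX = "complex"
    CHAOTIC = "chaotic"


class Solution(Enum):
    """Solution types from the solution-space step."""
    VERIFIABLE = "verifiable"  # objective: proved with evidence
    TESTABLE = "testable"      # objective: proved through execution
    EXPLORABLE = "explorable"  # subjective


def suggest_approach(problem: Problem, solution: Solution) -> str:
    """Hypothetical routing of a question through the problem and solution spaces."""
    if problem is Problem.CHAOTIC:
        # Novel solutions are unlikely to be well represented in a fixed knowledge base.
        return "work outside GenAI"
    if problem is Problem.COMPLEX:
        # Assumes the problem can be decomposed; you stitch the parts together yourself.
        return "decompose, prompt each part, then synthesize"
    if problem is Problem.CLEAR and solution is Solution.VERIFIABLE:
        # A search engine is a more direct path to an evidence-backed answer.
        return "search instead"
    if solution is Solution.EXPLORABLE:
        # Assumption: subjective questions lean toward iterative expansion.
        return "iterative expansion"
    # Assumption: objective (Testable or Verifiable) questions lean toward refinement.
    return "iterative refinement"


print(suggest_approach(Problem.COMPLICATED, Solution.TESTABLE))  # iterative refinement
```

Treat this as a thinking aid rather than a rulebook: in practice, the same problem may call for both refinement and expansion as it evolves.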

If you've made it this far, it's time to pause and understand the constraints. This is important. Do you know enough about the problem domain to accurately judge the quality of the GenAI results? Do you trust yourself to spot hallucinations? Are all compliance and privacy needs being met? Have you developed a trusted relationship with the platform you're using (i.e., have you used it successfully in the past, and do you generally trust its quality and security)? Finally, ask yourself “what's the risk of getting it wrong?” Think hard about your risk tolerance for inaccurate results.

If you've successfully navigated the sea of constraints, it's time to compose your prompt. This is your initial input to the GenAI tool. You're now about to begin a journey of either iterative expansion or iterative refinement. Both journeys drive toward a solution, but they do so in opposite, complementary ways. You may find that you employ both techniques as your problem (and, by necessity, your solution) evolves.

Iterative refinement works toward a testable solution by incrementally revealing a more complete outcome. It is best used for problem spaces like coding, analytics, architecture implementations, etc. It moves toward increasingly tight, constrained answers. Along the way, you will want to write prompts that:

- Ask for clarification.

- Ask for alternate approaches.

- Ask for limitations to the provided approach.

- Fill in the details.

AI will often provide an outline, but it’s up to you to know enough about the domain to guide it toward a more complete answer. For example, if I’m looking to understand how to develop a web scraper in Python, ChatGPT may provide a very good first-pass solution. But do I know enough to actually run it? Can I set up a virtual environment, select an executable, and pip install the dependencies? Do I know how I will move a single script from local development to a production deployment? Expect to iteratively expand into each of these categories, then iteratively refine into specific solutions.

The counterpoint to iterative refinement is iterative expansion. Here, we are deliberately looking to "land and expand." Gather information, probe for weak points in the arguments, and drive the conversation toward a more robust solution. Think of it like landing on the Wikipedia page for Siberian tigers at 9 p.m. and then finding yourself reading an article about the Seven Years' War three hours later. Explore and expand by looking for ways to build on the provided content. And remember, it's okay to have an opinion. You don't have to be objective. Steer the conversation where you'd like it to go.

- Ask leading, open-ended questions.

- Ask for explanations and rationales.

- Don’t anchor to the outputs provided.

- Ask for additional considerations.

- Look for what’s not on the page.

In both of these processes, you should plan to employ the concept of the trust loop. What's a trust loop? In short, it's the process by which we verify outputs and check them for bias. Does this response make sense? If so, does it have an agenda or an opinion? Is the response reinforcing known stereotypes or biases? It's not necessarily wrong for an AI response to have an opinion; we simply need to ask ourselves whose opinion it is, why it's there, and whether it aligns with our own worldview.

In the penultimate step, ask yourself: do I care enough about this task to intentionally learn from it? If so, determine whether it matters to you in the long term (perhaps due to intrinsic interest, self-improvement, etc.) or whether it is valuable because you plan to reuse it in the near term. This should help you decide the extent to which, and the process by which, you understand and learn from what you've done. Long-term learning favors pattern recognition, abstraction, and identifying key concepts. Short-term retention favors identifying definitions and key terms and committing them to memory.

Finally, take action! Do something with your new knowledge! Grow it, share it, use it. AI alone never solves a problem; solutions must be applied to be valuable, and it takes people to apply those solutions.

Conclusion

AI is a thinking aid. It is not an answer engine. Choose your problems and your pathways appropriately, and it can be massively enabling. Choose them poorly, and you risk crippling the learning process. The IA model isn't intended to be an explicit process or a conscious checklist. It's simply a guide: pick the parts that work well for you and try to align the concepts with how you work best.

Tech firms are only too happy to make technology ridiculously easy to use. The advent of cloud computing and storage has made millions of people comfortable with the idea of outsourcing important capabilities and processes, both business and personal, to major corporations. And it's true that, for most people and organizations, the benefits clearly outweigh the drawbacks. In the age of GenAI, Big Tech would like nothing more than for the great mass of humanity to outsource their critical thinking skills to privately controlled entities, in exchange for a modicum of convenience and productivity gains. Control over how people think is, after all, the ultimate in vendor lock-in.

To some degree, the IA approach accepts the inevitability of AI becoming integral to how we perform professional knowledge work. One may choose not to engage AI in one's personal endeavors, but I expect that within professional circles, disengagement will soon cease to be an option, as the benefits of adoption become too large to ignore. While AI will become more consequential in the business world, we must not allow it to overwhelm our personal selves. It is a fine line to walk. In this future world, retaining agency in our interactions with AI systems will be more important than ever.

When you do use AI, force yourself into conscious interaction. Done well, AI use should feel just as demanding as writing on a blank page, with all the requisite gray matter cells blinking on and off. It is up to all of us, and each of us, to shape a future where tools are mere supporting actors in our grand play of life, and we hold the stage and the spotlight. Humanistic AI is merely a starting point, one that will have to evolve as our world adapts to this new reality. It is a necessary blending of the digital and the analog, and an opportunity to set a course rather than drift toward an uncertain future. I welcome the conversations and input to come and hope to expand further upon the notion of Humanistic AI in the future. But we have to start somewhere.